Thoughts on Generative AI

There is fear around Generative AI, which can be summed up as:

"Why would someone hire me, if they could give my brief to AI, which will spit out work as good as mine?"

The answer is refreshingly simple:

"Because AI can't spit out work as good as yours."

Why not?

ChatGPT surprised us all by being much smarter than Siri, partly because it was trained on an order of magnitude more data.

But there's a problem... ChatGPT was trained on (almost) the entire web. There is no more data.

It's why AI progress has slowed.

ChatGPT et al. are not "good enough" to replace human creatives, or programmers, or drivers.

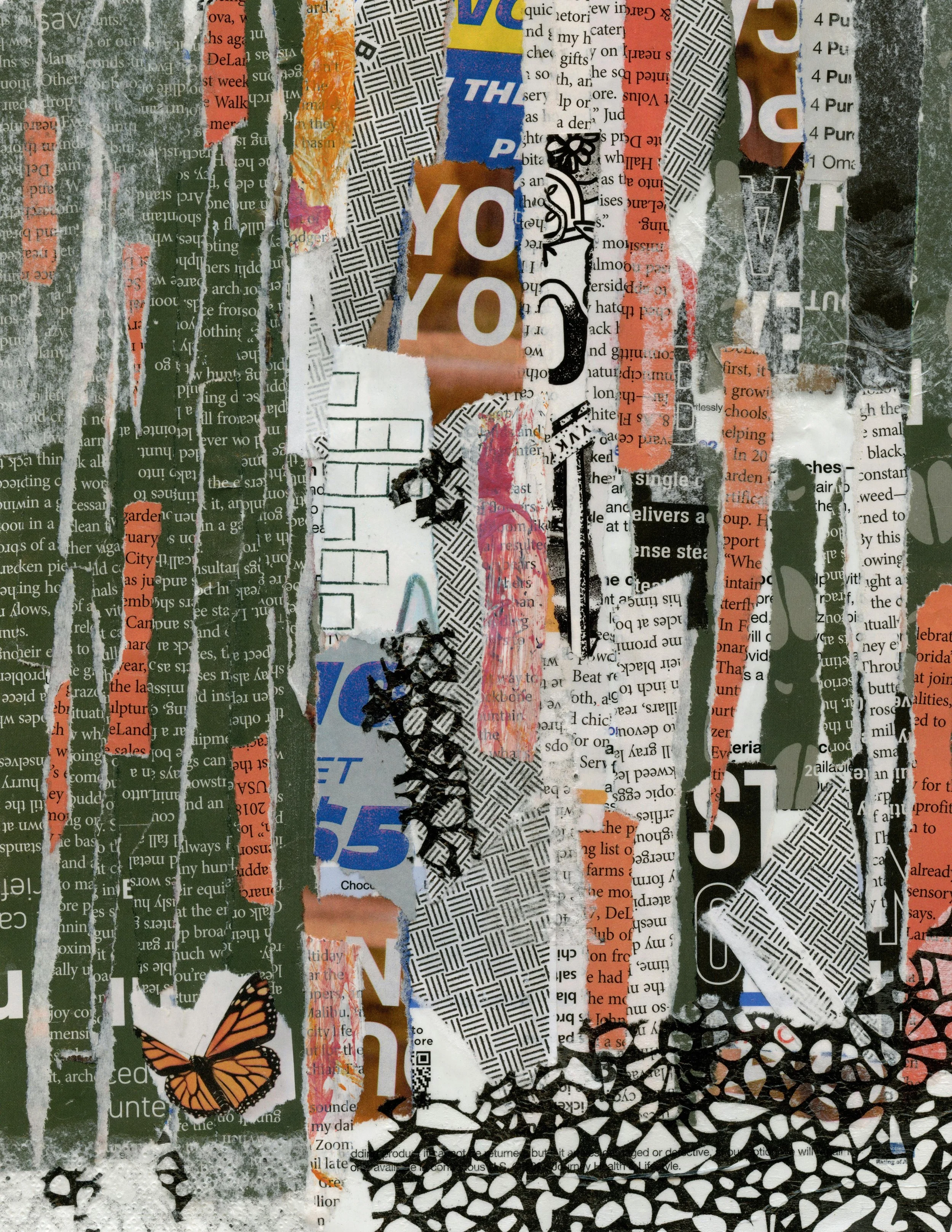

I find it difficult to articulate what is lacking in AI-generated design, but anyone can see that real artwork looks somehow better, more alive.

You can train AI on AI-generated art, but you quickly run into problems: data quality drops, and hallucinations make their way into the training data. Evidently something real is lost when using AI.

The idea that AI art is not only “good art”, but so good that artists will all ditch conventional tools in favour of prompt-machines, is not grounded in reality.

If Anchor Animation needs a designer or animator, we hire one, because the results are better than a machine's. Financially, it would be in our interest for a robot to do the work, but the results simply aren't there.

The Amends Problem

AI isn't as good as a real artist

AI can't read your mind

The creative process is fluid

For these three reasons, you will always need to give feedback, but AI can't cope with it.

I spoke to a client before Christmas about AI. They assumed all managers would be tasked with generating content themselves, using AI.

Their hands-on experience with AI proved this was not going to be the case. The AI simply wouldn’t listen to them: it changed things they didn’t want changed, and didn’t execute the changes they requested.

Anchor Animation had to step in to finish the work.

The Originality Problem

There's a third problem: AI can't be original. It can only rehash what has gone into it.

Imagine if Wes Anderson were starting his career today. Generative AI exists, but it has never been trained on any of his work, because he's only just started. Using Generative AI, could Wes Anderson create content in his style? No.

AI doesn't imagine the way a human brain does. A child can draw a tree from memory; an AI model can only produce a tree because it has been trained on images of trees.

When trained only on Shutterstock data, AI generates images with fragments of the Shutterstock watermark on top.

Tesla’s AI can generate video, but as it has only been fed input from Tesla car cameras, it can only generate roads. The model is dependent on the data that went into it.

It’s clear the AI is rehashing what has gone into it. Useful, yes, but it’s not going to put us all out of a job.

The Ethics Problem

Then we reach the fourth problem: some of the training data is stolen.

There's an ethical problem here, and the public are pushing back more than I expected.

If you use AI, people can tell. AI has a look, and people don’t like it.

Some AI fans claim AI is to art what photography is to painting. This is a bad analogy. A better one would be to compare AI art to photocopying a painting.

Rebecca Salvatore shared an excellent anecdote about her daughter on LinkedIn recently:

"Once we hit the train home, we put her AI-spotting skills to the test with both image and written tests. And she, at 10, can spot AI almost every time. Is that because she's been exposed to it from so young?"

Source: Rebecca Salvatore on LinkedIn (https://www.linkedin.com/in/rebecca-salvatore/)

When AI Works

I'm not anti-AI as a rule.

I love this quote by John Gruber:

“It is over hyped, but that doesn’t mean it shouldn’t be significantly hyped”.

Source: John Gruber on Episode 439 of The Talk Show

ChatGPT is amazing. It can certainly help you be more productive.

AI can be put to uses such as cancer detection, or improving tools like text-to-speech or translation. We should be thrilled it’s here.

But AI can’t replace a human, and I resent the argument that it can, and that we should be excited it can.

There seem to be a lot of people on LinkedIn who are thrilled about AI: not for its potential to improve our lives, but for its ability to put people out of a job.

The prospect of mass unemployment in society brings them more joy than the technology.

Anchor Animation has no problem working with artists and clients who use AI, though we don't use it for our projects, and would object to our suppliers using it on them.

What Anchor Animation won't do is work for people who get joy from the unemployment of others. We would rather go out of business than lose our soul.

Given that the best "AI art" projects are those where humans step in at every stage of the process, using traditional tools to stop the AI from being awful, I can't help feeling it would be more efficient to cut out the AI completely.

But what about the future?

"It's the worst the tech will ever be" is a catch-all expression which is always true, so it’s difficult to argue against it.

I'm not saying a computer will never be able to design. I'm saying it can't today, and the immediate future doesn't look too rosy for LLMs either.

At best, you can get away with AI generated pieces in a larger production.

The tech bros suggest one prompt will give you an end-to-end product: "You'll go to Netflix, type the kind of TV show you want and it will generate it".

It doesn’t work that way. You'd need another leap, akin to the leap from Siri to ChatGPT, to achieve this. The problem is that there is no more data.

Conclusions

People tend to get emotional when criticising AI. I understand. I agree. But for me, it’s also practical:

The work isn't as good as real creative output.

AI can only rehash what already exists, never create anything new. Tesla’s AI illustrates this: fed only on footage of roads, it is only capable of generating videos of roads.

At best, it is a useful tool for artists, if you can overlook the risk of using stolen data. But there's no clear roadmap for it becoming more than this, and there is plenty of pushback when audiences spot AI-generated pieces.